Distributed systems rarely fail in loud, obvious ways. They fail quietly. Jobs run at the wrong moment. Logs stop lining up. Alerts arrive too late to be useful. Replication drifts out of sync. In many cases, the root cause is not a bad deployment or a broken network, but incorrect time assumptions spread across machines that no longer agree on what “now” means.

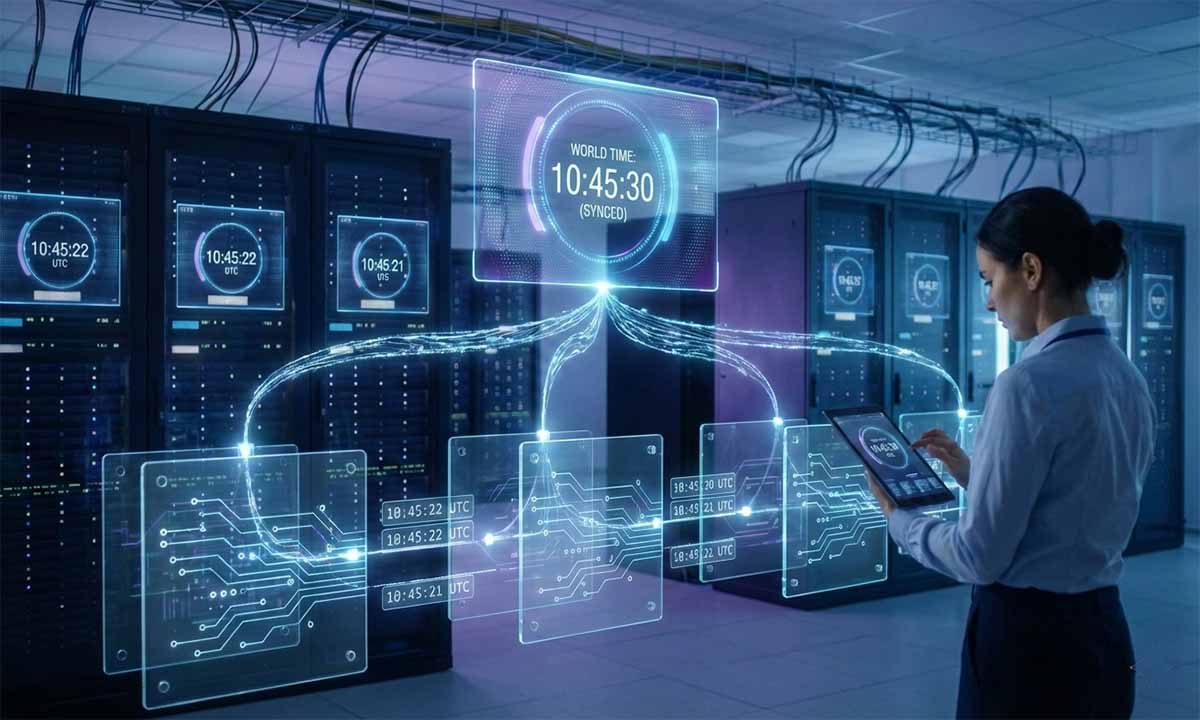

Accurate world time is essential for distributed systems because scheduling, logging, replication, and security all depend on a shared clock.

Local system time drifts, pauses, and changes with daylight saving rules, creating silent failures across regions. Using a reliable world time source replaces fragile local assumptions with a consistent reference, keeping cron jobs aligned, logs sortable, and system behavior predictable at scale while reducing operational risk and investigation time.

Time as shared infrastructure, not a local setting

Most engineers learn to distrust networks long before they learn to distrust clocks, yet clocks are just as fragile in real systems. They depend on hardware oscillators that drift over time, they pause during virtual machine suspension, and they change when administrators adjust settings or apply patched images.

In a single server setup, these weaknesses often remain hidden. In distributed systems, they surface as coordination bugs: a job scheduled on one node runs while another still believes it is yesterday, logs appear out of order, and cache entries expire too early in one region and too late in another. Accurate world time reframes time as shared infrastructure rather than a local setting. It becomes a service instead of a guess, allowing systems to rely on a neutral authority to determine the current time.

Why local clocks fail in modern infrastructure

Local clocks were designed for machines that stayed powered on, ran one workload, and rarely moved. Modern infrastructure violates every one of those assumptions. Instances are short lived. Containers migrate. Hosts reboot automatically. Virtual machines pause and resume without warning.

- Hardware clocks drift at different rates.

- Virtual machines pause during host maintenance.

- Containers inherit host time without isolation.

- Time zone data updates reach hosts at different moments, so daylight saving rules can disagree.

- Manual corrections introduce human error.

- Boot order affects when synchronization starts.

- Network latency delays corrections.

Each issue alone may seem minor. Together, they make local time unreliable as a system wide reference.
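One way to see how far a local clock has wandered is to compare it with a reference while compensating for network latency. The sketch below applies the round-trip halving idea from Cristian's algorithm. The `fetch_reference`, `local_now`, and `monotonic` parameters are hypothetical hooks introduced for illustration, and the assumption that the reply spends half the round trip in flight is only an approximation.

```python
import time
from datetime import datetime, timedelta, timezone


def utc_wall_clock():
    """Read the local wall clock as an aware UTC datetime."""
    return datetime.now(timezone.utc)


def estimate_offset(fetch_reference, local_now=utc_wall_clock, monotonic=time.monotonic):
    """Estimate (reference - local) clock offset, compensating for round-trip delay.

    fetch_reference is a hypothetical callable returning an aware UTC datetime.
    """
    started = monotonic()
    reference = fetch_reference()
    round_trip = monotonic() - started
    local = local_now()  # local wall clock at the moment the reply arrived
    # Assumption: the reply spent half of the round trip in flight.
    reference_at_receipt = reference + timedelta(seconds=round_trip / 2)
    return reference_at_receipt - local
```

A negative result means the local clock runs ahead of the reference. The round trip is measured with a monotonic clock so that a wall clock adjustment during the request cannot distort it.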

Scheduling breaks first when time is wrong

Cron jobs are often the earliest warning sign of time related failures. A schedule that works on a single machine starts to misbehave once it spans regions. A daily job may run twice. A weekly task may skip an execution. DST transitions introduce especially subtle bugs, including days with missing hours or repeated ones.

To avoid these failures, many teams stop calculating schedules against local clocks entirely. Instead, they query a shared reference such as the World Time JSON API, then compute execution windows based on a consistent definition of current time. This turns scheduling into a deterministic calculation rather than a regional guess.

This approach fits well with established BSD practices, especially those described in BSD crontab scheduling, where predictable execution depends on stable time rather than local system state.
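The missing and repeated hours mentioned above are easy to demonstrate. This minimal sketch measures the real elapsed time between two consecutive local midnights. It uses `America/New_York` purely as an illustrative zone and assumes the host has time zone data installed for `zoneinfo`. The helper name `local_day_length` is made up for this example.

```python
from datetime import date, datetime, timedelta, timezone
from zoneinfo import ZoneInfo

NEW_YORK = ZoneInfo("America/New_York")  # illustrative zone, not from the article


def local_day_length(day, tz):
    """Return the real elapsed time between midnight on `day` and the next midnight in `tz`."""
    following = day + timedelta(days=1)
    start = datetime(day.year, day.month, day.day, tzinfo=tz)
    end = datetime(following.year, following.month, following.day, tzinfo=tz)
    # Convert to UTC before subtracting: subtracting two datetimes that share
    # the same tzinfo compares wall-clock readings and hides the DST shift.
    return end.astimezone(timezone.utc) - start.astimezone(timezone.utc)


spring = local_day_length(date(2024, 3, 10), NEW_YORK)   # clocks jump forward
autumn = local_day_length(date(2024, 11, 3), NEW_YORK)   # clocks fall back
utc_day = local_day_length(date(2024, 3, 10), timezone.utc)
```

On those two dates the local day lasts 23 and 25 hours respectively, while the UTC day is always 24 hours. A schedule expressed as "every 24 hours" and one expressed as "every local midnight" therefore diverge twice a year.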

Logging and audit trails depend on ordering

Logging and audit trails rely on correct ordering to remain meaningful. Logs tell a system’s story through timestamps, and when those timestamps disagree, the narrative breaks down. In distributed logging pipelines, events from many services are merged and sorted based on time. When clocks drift, errors can appear before the requests that caused them, security events may show up after remediation has already occurred, and investigations slow to a halt as timelines become unreliable.

Accurate world time restores this ordering by giving every service the same temporal reference. Events can be tagged against a shared clock, making correlation dependable again. This principle aligns closely with best practices outlined in BSD system logs, where time consistency is essential for troubleshooting and compliance.
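As a rough illustration of merging on a shared clock, the sketch below combines per-node event streams into one timeline. The `Event` type and `merge_streams` helper are hypothetical names, and the code assumes each stream is already sorted and that every timestamp was taken from the same reference clock in UTC.

```python
import heapq
from datetime import datetime, timezone
from typing import NamedTuple


class Event(NamedTuple):
    timestamp: datetime  # assumed to come from the shared reference clock, in UTC
    node: str
    message: str


def merge_streams(*streams):
    """Merge per-node event streams, each already sorted by time, into one timeline."""
    return list(heapq.merge(*streams, key=lambda event: event.timestamp))


def at(second):
    """Build a sample UTC timestamp within one minute, for the usage example."""
    return datetime(2024, 1, 1, 12, 0, second, tzinfo=timezone.utc)


api_log = [Event(at(1), "api", "request received"), Event(at(4), "api", "error returned")]
db_log = [Event(at(2), "db", "query started"), Event(at(3), "db", "query failed")]
timeline = merge_streams(api_log, db_log)
```

With consistent timestamps the database failure appears before the API error it caused. If the `db` node's clock ran a few seconds fast, the same merge would place the failure after the error and the causal chain would read backwards.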

Replication, caching, and cross region coordination

Time also governs how data moves through distributed systems. Replication lag, cache expiration, and conflict resolution all depend on timestamps. If one region believes data is newer while another believes it is stale, subtle corruption can occur.

Using a single time reference allows systems to reason clearly about freshness, expiry, and ordering. Caches invalidate consistently. Replicas agree on what came first. Failover logic behaves as expected.
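The sketch below shows what reasoning about freshness and ordering can look like when every timestamp comes from one reference. The `Versioned` record and both helpers are illustrative names, and last-write-wins is just one possible conflict policy, chosen here because it depends so directly on timestamps.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Versioned:
    value: str
    written_at: datetime  # reference-clock timestamp in UTC (assumption)
    ttl: timedelta


def is_fresh(entry, now):
    """An entry is fresh until written_at + ttl, judged against the caller's reference time."""
    return now < entry.written_at + entry.ttl


def resolve(a, b):
    """Last-write-wins. Only sound when both timestamps come from the same clock."""
    return a if a.written_at >= b.written_at else b
```

Passing `now` in explicitly, rather than reading the local clock inside `is_fresh`, means every region that evaluates the same entry with the same reference time reaches the same answer.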

A practical playbook for reliable time handling

- Select one authoritative time source.

- Store all timestamps in UTC.

- Convert to local time only at presentation boundaries.

- Compute schedules using world time.

- Tag logs using the same reference clock.

- Handle API failures with safe fallbacks.

- Test across DST transitions explicitly.
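Two of the items above, storing UTC and converting only at the presentation boundary, can be sketched as a pair of small helpers. The names `to_storage` and `to_display` are hypothetical, and the fixed offset zone in the usage lines is only a stand-in for whatever zone a user interface would supply.

```python
from datetime import datetime, timedelta, timezone


def to_storage(moment):
    """Normalize an aware datetime to a UTC ISO 8601 string for storage."""
    if moment.tzinfo is None:
        # A naive datetime has no defined offset, so guessing one would hide bugs.
        raise ValueError("refusing to store a naive datetime")
    return moment.astimezone(timezone.utc).isoformat()


def to_display(stored, display_tz):
    """Convert a stored UTC string to a local, human-readable form."""
    moment = datetime.fromisoformat(stored)
    return moment.astimezone(display_tz).strftime("%Y-%m-%d %H:%M %Z")


# Usage with an illustrative fixed UTC-5 offset labeled "EST".
est = timezone(timedelta(hours=-5), "EST")
stored = to_storage(datetime(2024, 1, 1, 12, 0, tzinfo=est))
shown = to_display(stored, est)
```

Rejecting naive datetimes at the storage boundary is a deliberate choice in this sketch: it forces callers to state which clock a value came from before it is persisted.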

Code examples for distributed systems

Fetching current world time for logging

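    import json
    import urllib.request
    from datetime import datetime

    def fetch_world_time():
        """Return the current UTC time reported by the world time API."""
        # A strict timeout keeps a slow time service from stalling the caller.
        with urllib.request.urlopen("https://time.now/api/timezone/utc", timeout=5) as response:
            payload = json.loads(response.read().decode("utf-8"))
        # Assumes the response carries an ISO 8601 timestamp in its "iso" field.
        return datetime.fromisoformat(payload["iso"])

    timestamp = fetch_world_time()
    print("Event logged at", timestamp.isoformat())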

This pattern avoids reliance on the local system clock and keeps logs comparable across nodes.

Scheduling a task using shared time

    from datetime import timedelta

    now = fetch_world_time()
    # Truncate to midnight UTC (microseconds included), then step to the next day.
    next_run = now.replace(hour=0, minute=0, second=0, microsecond=0) + timedelta(days=1)
    delay_seconds = (next_run - now).total_seconds()

Because the reference time is in UTC, which has no daylight saving shifts, the calculation remains stable even during DST transitions.

Error handling and fallback behavior

Time services should be treated like any external dependency. Systems must assume temporary failures and plan accordingly.

- Apply strict request timeouts.

- Cache recent responses briefly.

- Retry with controlled backoff.

- Log failures clearly.

- Fall back to the last known good time.

- Avoid blocking critical paths.

- Monitor latency and error rates.
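Several of these points can be combined in one small wrapper. The sketch below is a minimal illustration, not a production client: `ResilientClock` and its parameters are hypothetical, the `fetch` callable is assumed to raise `OSError` (which covers `urllib` network errors and timeouts) on failure, and the fallback advances the last good reading using a monotonic clock so it does not stand still.

```python
import time
from datetime import timedelta


class ResilientClock:
    """Wrap a time-fetching callable with retries, backoff, and a last-known-good fallback."""

    def __init__(self, fetch, retries=3, base_delay=0.2,
                 sleep=time.sleep, monotonic=time.monotonic):
        self._fetch = fetch          # hypothetical callable returning an aware datetime
        self._retries = retries
        self._base_delay = base_delay
        self._sleep = sleep
        self._monotonic = monotonic
        self._last_good = None       # (reference datetime, monotonic reading)

    def now(self):
        for attempt in range(self._retries):
            try:
                reference = self._fetch()
            except OSError:
                # Exponential backoff between attempts, none after the last one.
                if attempt < self._retries - 1:
                    self._sleep(self._base_delay * 2 ** attempt)
                continue
            self._last_good = (reference, self._monotonic())
            return reference
        if self._last_good is None:
            raise RuntimeError("no reference time available yet")
        # Fall back: last good reading plus the time elapsed since it was taken.
        reference, taken_at = self._last_good
        return reference + timedelta(seconds=self._monotonic() - taken_at)
```

The fallback drifts at the rate of the local oscillator, so it is only suitable for bridging short outages. Malformed responses raise other exception types and are deliberately not swallowed here.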

Testing time logic before it fails in production

Time related bugs often appear months after deployment. Tests should simulate the future, not just the present.

- Freeze time during tests.

- Mock API responses.

- Validate ordering assumptions.

- Run scenarios that cross DST boundaries.
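A simple way to freeze time is to pass the clock in as a dependency and replace it with a mock in tests. The sketch below assumes a hypothetical `seconds_until_next_midnight` function that takes a `clock` callable, and it pins the clock to a date on which US clocks change to confirm that a UTC-based schedule is unaffected.

```python
from datetime import datetime, timedelta, timezone
from unittest import mock


def seconds_until_next_midnight(clock):
    """Seconds from clock() until the next midnight UTC. `clock` returns an aware UTC datetime."""
    now = clock()
    next_run = now.replace(hour=0, minute=0, second=0, microsecond=0) + timedelta(days=1)
    return (next_run - now).total_seconds()


def test_schedule_on_a_dst_date():
    # Frozen reference time: 06:30 UTC on 10 March 2024, a US DST transition date.
    frozen = mock.Mock(return_value=datetime(2024, 3, 10, 6, 30, tzinfo=timezone.utc))
    assert seconds_until_next_midnight(frozen) == 17.5 * 3600
    frozen.assert_called_once()


test_schedule_on_a_dst_date()
```

The same injection point lets a test suite replay any moment it cares about, such as the last second of a year or the instant before a token expires, without waiting for the calendar.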

Security considerations tied to time

Authentication tokens, certificates, and replay protection all depend on accurate time. Drift can invalidate credentials or extend their lifetime beyond intended limits. Using a shared time reference reduces these risks and simplifies reasoning about expiration and renewal.
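To make the expiration reasoning concrete, here is a minimal sketch of a validity check that takes the reference time as an argument and allows a small leeway for residual skew. The function name, the parameters, and the 30 second default are assumptions for illustration, not values taken from any particular token standard.

```python
from datetime import datetime, timedelta, timezone


def is_token_valid(issued_at, lifetime, now, leeway=timedelta(seconds=30)):
    """Check a token's validity window against a reference time.

    All datetimes are assumed to be aware and in UTC. The leeway widens the
    window on both sides to tolerate small residual clock skew.
    """
    not_before = issued_at - leeway
    expires = issued_at + lifetime + leeway
    return not_before <= now < expires
```

The tighter the agreement between clocks, the smaller the leeway can be, which shrinks the period during which an expired credential is still accepted.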

Operational consistency also benefits process supervision and automation, as described in BSD process management, where predictable timing prevents cascading restarts and failed retries.

Common failures and better approaches

| Problem | What breaks | Symptom | Better approach | How a world time API helps |
|---|---|---|---|---|
| Clock drift | Schedulers | Missed jobs | External reference | Consistent current time |
| DST changes | Cron rules | Double execution | UTC schedules | Handles transitions |
| VM suspension | Logs | Out of order events | Shared clock | Stable ordering |
| Region mismatch | Billing | Incorrect dates | Single authority | Unified time view |
| Manual fixes | Audits | Gaps | Automated time | Reduced human error |
| Mixed platforms | Coordination | Inconsistent behavior | API abstraction | Platform neutral time |

Building calmer and more predictable systems

Accurate world time removes an entire class of invisible bugs. It simplifies scheduling, restores trust in logs, and makes system behavior easier to reason about under pressure. Teams that treat time as shared infrastructure spend less effort chasing unexplained failures and more time improving reliability.

For historical background on how networked systems traditionally synchronize clocks, the overview of Network Time Protocol explains both its strengths and its limits.

If you want a practical next step, consider introducing World Time API by Time.now into one service and observe how coordination improves across the rest of the system.
